LLMs and the New Data Moat: Defensible AI in a Competitive Market

Every technology wave starts loud, then quietly rewards the teams that do the unglamorous work.

AI is no different.

You can rent compute and download weights, yet two groups with nearly identical toolkits can land miles apart in quality.

The simple reason is data.

The model is the engine, the data is the fuel, and those who own the station set the pace.

This article explains how to build a real moat around your systems, why data beats buzz, and what a practical roadmap looks like for a modern Large Language Model stack.

Why Data Moats Matter

Models generalize, but markets reward specificity.

Users do not want a generic answer; they want one that fits their domain, policy, and tone.

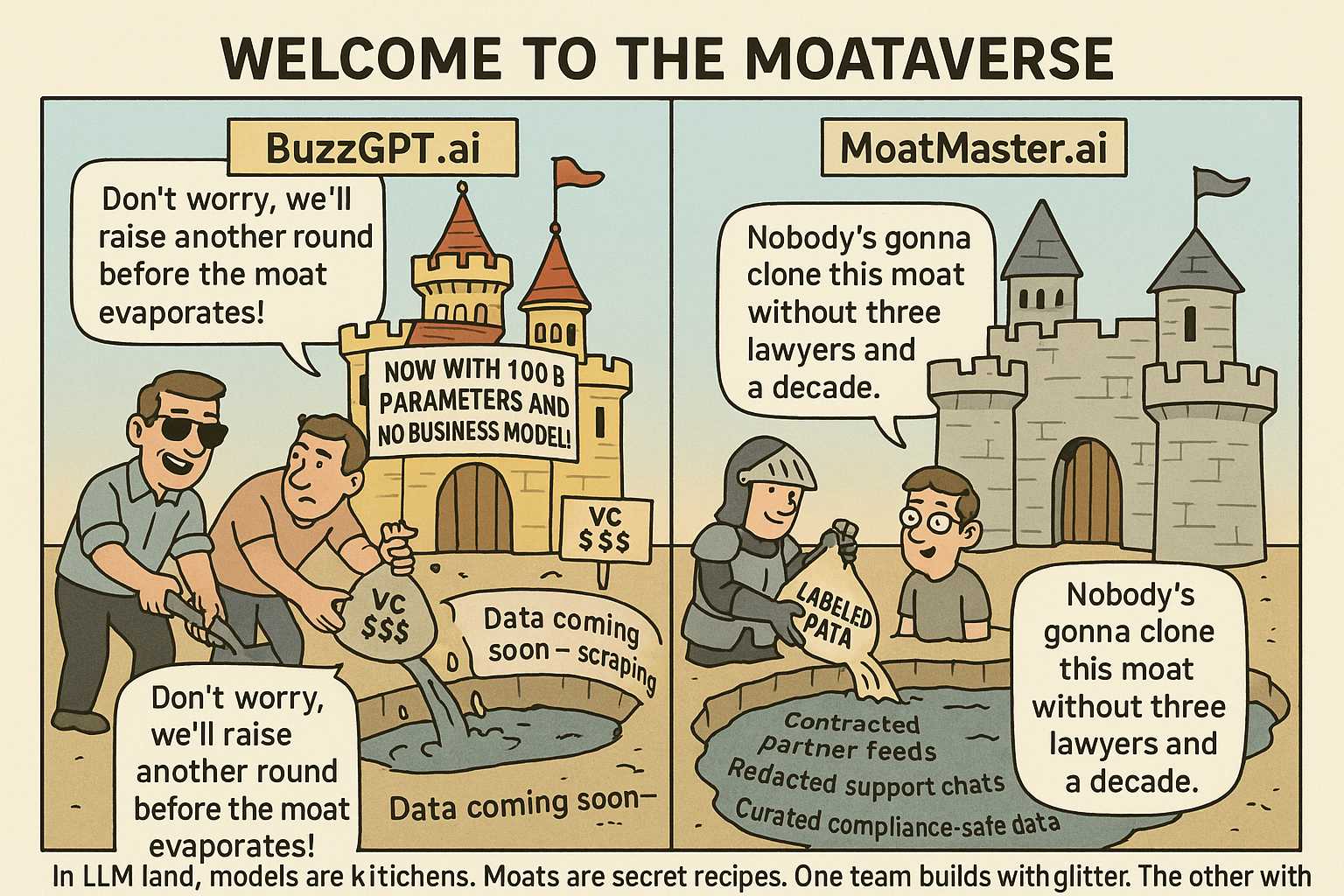

A moat is the mix of rights, processes, and habits that let you serve those needs better than rivals. It is not only size.

It is the difficulty a competitor would face to recreate your corpus and the steady improvement that comes from owning feedback loops.

Training tricks spread.

Data practices compound.

Scarcity and Friction

Scarcity is not counting terabytes. Scarcity is the friction others must overcome to gather the same signals.

If a rival can scrape your sources in an afternoon, you have a puddle, not a moat.

If they need months of consent flows, editorial judgment, and domain expertise, you have a barrier. Treat the subtle signals of quality as assets, because they are.

Quality Beats Quantity

A pantry full of stale flour does not bake a better cake. Deduplicate aggressively, track provenance, and choose labels that capture intent rather than keywords. Include negative examples so the model can say “I do not know” with confidence. That simple behavior preserves trust and reduces support costs.
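As a rough illustration of deduplicating while tracking provenance, here is a minimal Python sketch. It only catches exact duplicates after light normalization, not near-duplicates, and the `Record` type and source labels such as `support_tickets` are hypothetical names chosen for the example.

```python
import hashlib
import re
from dataclasses import dataclass


@dataclass
class Record:
    text: str
    source: str  # hypothetical provenance label, e.g. "support_tickets"


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash the same."""
    return re.sub(r"\s+", " ", text.strip().lower())


def deduplicate(records):
    """Keep the first copy of each normalized text and list every source it came from."""
    kept = {}
    for rec in records:
        key = hashlib.sha256(normalize(rec.text).encode("utf-8")).hexdigest()
        entry = kept.setdefault(key, {"text": rec.text, "sources": []})
        if rec.source not in entry["sources"]:
            entry["sources"].append(rec.source)
    return kept


records = [
    Record("How do I reset my password?", "support_tickets"),
    Record("how do I  reset my password?", "chat_logs"),
    Record("What is the refund policy?", "support_tickets"),
]
unique = deduplicate(records)
for entry in unique.values():
    print(entry["text"], entry["sources"])
```

Keeping the list of sources next to each surviving record means a later rights or deletion request can be traced back to where the text came from.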

Sources of a Modern Data Moat

Moats form where your product meets reality. That includes the text customers write, the edits they make, the options they ignore, and the outcomes they confirm. It also includes internal documents, partner feeds under contract, and public data you have curated with unusual care. Each source deserves a clear view of rights, sensitivity, freshness, and expected value. Write that view down. Revisit it as the product evolves.

Proprietary User Interactions

Tickets, chats, briefs, and code reviews encode how your users think. With explicit consent and careful boundaries, these artifacts can improve tone, helpfulness, and adherence to policy in ways generic corpora never will. The guardrails matter. Give customers obvious opt-in and opt-out, separate tenants at all times, and log access like you would money.
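One way to picture those guardrails is a single function that every training job must go through. The sketch below is an assumption about how such a gate could look, not a description of any particular product: `Interaction`, `select_trainable`, and the shape of the access log are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Interaction:
    tenant_id: str
    user_id: str
    text: str


def select_trainable(interactions, opted_in, tenant_id, access_log, actor):
    """Return one tenant's interactions from opted-in users and log the access."""
    selected = [
        item
        for item in interactions
        # Tenant separation: never return another tenant's rows.
        if item.tenant_id == tenant_id
        # Consent: only users with an explicit opt-in on record.
        and (item.tenant_id, item.user_id) in opted_in
    ]
    access_log.append(
        {
            "actor": actor,
            "tenant_id": tenant_id,
            "records_returned": len(selected),
            "at": datetime.now(timezone.utc).isoformat(),
        }
    )
    return selected


interactions = [
    Interaction("acme", "u1", "Please shorten this brief."),
    Interaction("acme", "u2", "Ticket: login fails on mobile."),
    Interaction("globex", "u1", "Review this pull request."),
]
opted_in = {("acme", "u1"), ("globex", "u1")}  # assumed consent records
access_log = []
batch = select_trainable(interactions, opted_in, "acme", access_log, actor="training-job")
print(len(batch), access_log[0]["records_returned"])
```

An opt-out is then just the removal of a pair from `opted_in`, and the log records who pulled what and when.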

Rights, Contracts, and Compliance

Legal clarity is an underrated advantage. Data that is licensed, revocable, or sensitive must be handled with precision. Clear rights let you train and serve without anxiety. Murky rights create rework and risk. Invest in contracts that spell out allowed uses, retention, deletion, and audit paths. Treat compliance as an enabler, not a box to tick. The paradox is simple. The stricter you are, the more data customers will let you use.

Building the Moat Without Boiling the Ocean

Ambition fails when it tries to do everything at once. Pick a narrow surface and win it completely. That gives you a proving ground for policies, tooling, and ROI. From there, expand along adjacent tasks, reusing the same playbook and people. The goal is boring reliability that gets more powerful with each release, not a fireworks show that fades.

Data Inventory Comes First

If you do not know what you have, you cannot protect it or use it well. Build a living catalog that tracks sources, owners, sensitivity, lineage, and license terms. Tag items as trainable or not, public or private, stable or evolving. Make discovery fast, so teams can find approved data in minutes rather than reinvent the wheel.
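A living catalog can start very small. The following Python sketch assumes a handful of fields taken from the list above; the field names, the three sensitivity levels, and the example entries are illustrative assumptions rather than a standard schema.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    name: str
    owner: str
    sensitivity: str  # assumed levels: "public", "internal", "restricted"
    license_terms: str
    trainable: bool
    lineage: list = field(default_factory=list)  # names of upstream sources


def approved_for_training(catalog, allowed_sensitivity=("public", "internal")):
    """List entries tagged trainable whose sensitivity is within the allowed levels."""
    return [
        entry.name
        for entry in catalog
        if entry.trainable and entry.sensitivity in allowed_sensitivity
    ]


catalog = [
    CatalogEntry("help_center_articles", "docs-team", "public", "owned", True),
    CatalogEntry("partner_feed", "bizdev", "internal", "contract, no training", False),
    CatalogEntry("support_tickets", "support", "restricted", "customer consent", True,
                 lineage=["ticketing_export"]),
]
print(approved_for_training(catalog))
```

Even a list like this answers the first question a team will ask, which is what they are allowed to train on today.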

Feedback Loops That Pay Rent

A moat deepens when every user action can become a training signal. Close the loop by collecting quick ratings, tracking edits, and validating outcomes. Choose signals that are cheap to collect and hard to game. Build tools so product teams can push new instructions, negatives, and evaluation sets without pleading for GPU time.
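To make the loop concrete, here is a minimal sketch of turning edits and ratings into training signals. It assumes a 1 to 5 rating scale and treats a user's edit as the preferred answer over the model's original output; `FeedbackEvent` and `to_training_signals` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FeedbackEvent:
    prompt: str
    model_output: str
    rating: Optional[int] = None  # assumed scale: 1 (bad) to 5 (good)
    edited_output: Optional[str] = None


def to_training_signals(events, low_rating=2):
    """Split feedback into preference pairs (from edits) and negatives (from low ratings)."""
    preference_pairs, negatives = [], []
    for event in events:
        edited = event.edited_output
        if edited and edited.strip() != event.model_output.strip():
            # The user's edit is taken as the better answer.
            preference_pairs.append(
                {"prompt": event.prompt, "chosen": edited, "rejected": event.model_output}
            )
        elif event.rating is not None and event.rating <= low_rating:
            negatives.append({"prompt": event.prompt, "output": event.model_output})
    return preference_pairs, negatives


events = [
    FeedbackEvent("Summarize the ticket", "Customer is angry.",
                  edited_output="Customer reports a billing error."),
    FeedbackEvent("Draft a reply", "No.", rating=1),
    FeedbackEvent("Translate the note", "Bonjour", rating=5),
]
pairs, negatives = to_training_signals(events)
print(len(pairs), len(negatives))
```

Edits are a useful signal here because they are cheap to capture and cost the user real effort, which makes them harder to game than a thumbs-up.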

Measuring Defensibility

Moats are not vibes. They are numbers that move slower for your rivals than for you. The scoreboard should capture product outcomes and data leverage. Track what improves with more data and what only gets cheaper with scale. Make changes small, observable, and reversible, so your metrics teach, not mislead. Document surprises and learn from them.

Product Metrics That Map to Value

Focus on success rate, time to completion, and user confidence. These link directly to retention and revenue. Calibrate them with real tasks that matter, not synthetic puzzles that are easy to game. Publish a simple definition of success and stick to it across teams, so you can compare changes honestly.
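A shared definition of success is easier to keep honest when it lives in code. The sketch below computes success rate and median time to completion from a list of task records; the `succeeded` and `seconds` fields are assumed names, and what counts as success is left to the team's own definition.

```python
from statistics import median


def summarize(tasks):
    """Compute success rate over all tasks and median completion time over successes."""
    completed = [task for task in tasks if task["succeeded"]]
    return {
        "success_rate": len(completed) / len(tasks) if tasks else 0.0,
        "median_seconds_to_completion": (
            median(task["seconds"] for task in completed) if completed else None
        ),
    }


tasks = [
    {"succeeded": True, "seconds": 10},
    {"succeeded": True, "seconds": 30},
    {"succeeded": False, "seconds": 90},
    {"succeeded": True, "seconds": 20},
]
summary = summarize(tasks)
print(summary)
```

Running the same function before and after a data or model change gives teams one comparable number pair instead of competing dashboards.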

Safety and Trust as First-Class Signals

Defensibility includes what you refuse to ship. Track jailbreak resilience, PII exposure, and consistency under adversarial prompts. Invest in red teams and pre-commit checks.

The side effect is speed. When safety is baked in, release reviews get faster, not slower.

Customers notice when the product is both capable and well behaved.
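Tracking PII exposure can begin with a crude pre-commit check like the one sketched here. The two regular expressions (email addresses and US-style Social Security numbers) are deliberately simple assumptions and will miss many kinds of personal data; a real check would need broader detection.

```python
import re

# Deliberately simple patterns; they are illustrative, not exhaustive.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def pii_exposure_rate(outputs):
    """Return the fraction of model outputs that match at least one PII pattern."""
    if not outputs:
        return 0.0
    flagged = sum(1 for text in outputs if EMAIL.search(text) or US_SSN.search(text))
    return flagged / len(outputs)


outputs = [
    "You can reach the customer at jane@example.com.",
    "Your order has shipped.",
    "The SSN on file is 123-45-6789.",
    "Thanks for your patience.",
]
rate = pii_exposure_rate(outputs)
print(rate)
```

Run against a fixed set of adversarial prompts before each release, a number like this turns "we care about privacy" into a trend line that can block a deploy.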

Competitors Can Copy Models, Not Your Data

Weights leak, tricks spread, and papers publish. What remains hard to clone is a learning culture attached to a living corpus.

That culture respects user expectations, recycles feedback into better instructions, and treats annotation as craft. Even if rivals train on similar sources, your tuned retrieval, prompts, and evaluation can turn the same ingredients into a better meal. Think of the model as a kitchen and the data as your secret pantry.

Open and Closed in Harmony

You can benefit from open models while guarding your data advantage. Use open components where they shine, then fine-tune or instruct on your proprietary distribution. Mix in retrieval that only you can power. Keep careful records so you can reproduce results without vendor melodrama. Openness gives you speed. Your data gives you staying power.

Risks and Anti-Moats

Every moat has leaks. Some you can seal. Others you manage. The art is knowing which is which and explaining the tradeoffs without hand-waving. The fastest way to ruin a moat is to mistake a supplier’s feature for your own advantage.

The world changes. Terms shift, laws update, and habits evolve. A stale corpus is a quiet bug that bites at scale. Build freshness into your process through scheduled refresh, periodic relabeling, and continuous evaluation. Aim for graceful degradation instead of sudden cliffs, so surprises stay small.
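A scheduled refresh needs something to tell it what is overdue. This small sketch assumes each source has a last-refreshed date and a maximum age in days; the source names and thresholds are made up for the example.

```python
from datetime import date, timedelta


def stale_sources(last_refreshed, max_age_days, today):
    """Return the names of sources whose last refresh is older than their allowed age."""
    return sorted(
        name
        for name, refreshed in last_refreshed.items()
        if today - refreshed > timedelta(days=max_age_days[name])
    )


last_refreshed = {
    "pricing_faq": date(2024, 1, 10),
    "legal_terms": date(2024, 3, 1),
    "style_guide": date(2023, 6, 1),
}
max_age_days = {"pricing_faq": 30, "legal_terms": 90, "style_guide": 365}

overdue = stale_sources(last_refreshed, max_age_days, today=date(2024, 3, 15))
print(overdue)  # ['pricing_faq']
```

Giving each source its own threshold reflects that pricing pages go stale much faster than a style guide, so one global cutoff would either nag too much or warn too late.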

The Road Ahead

The next chapter will favor teams that blend taste with discipline. Taste chooses what not to collect and which results are good enough. Discipline builds pipelines, tests edge cases, and writes down what worked. The combination is rare, which is why it is defensible.

Data moats form when you take that combination seriously and apply it week after week. The glamour is thin. The compounding is real. Keep the circle tight, write things down, and keep pruning scope; clarity beats cleverness when the job is to ship reliable intelligence that earns trust day after day.

Conclusion

A durable moat grows from rights, records, and relentless evaluation. Protect user trust. Pick narrow surfaces and nail them. Treat annotation and measurement as core products. Be generous with open tools, yet stubborn about your corpus.

Competitors can rent the same compute and study the same papers. They cannot easily recreate the decisions your users made, the labels your editors wrote, or the habits your team built. Build for that reality, and your moat will not merely hold; it will deepen.

Samuel Edwards is an accomplished marketing leader serving as Chief Marketing Officer at LLM.co. With over nine years of experience as a digital marketing strategist and CMO, he brings deep expertise in organic and paid search marketing, data analytics, brand strategy, and performance-driven campaigns. At LLM.co, Samuel oversees all facets of marketing—including brand strategy, demand generation, digital advertising, SEO, content, and public relations. He builds and leads cross-functional teams to align product positioning with market demand, ensuring clear messaging and growth within AI-driven language model solutions. His approach combines technical rigor with creative storytelling to cultivate brand trust and accelerate pipeline velocity.