When Will Private, Open Source LLMs Have Their WordPress Moment?

Open source has long been the great equalizer in technology. From Linux to Apache to WordPress, it’s repeatedly lowered the barriers to entry in complex industries—and democratized access in the process. Today, we stand on the edge of a similar transformation in artificial intelligence.

Access to large language models (LLMs) has so far been dominated by cloud-based APIs such as ChatGPT, Claude, and Gemini. But beneath the surface, open source LLMs are gaining momentum. They’re often smaller, cheaper to run, easier to fine-tune, and increasingly performant. If WordPress democratized content publishing, private LLMs might soon democratize AI deployment.

So the question is no longer if private, open source LLMs will have their WordPress moment—it's when.

The WordPress Explosion: A Blueprint for AI

What WordPress Did to the Web

Before WordPress, building a website was expensive, slow, and largely dependent on developers. Content management systems such as Drupal and Mambo (the project Joomla later forked from) existed, but they were far from beginner-friendly.

WordPress changed all that. What began in 2003 as a fork of b2/cafelog quickly became the web’s open source standard for content management. Its 5-minute install, plugin ecosystem, and thriving community brought web publishing to the masses. Over time, it supported everything from hobby blogs to ecommerce giants.

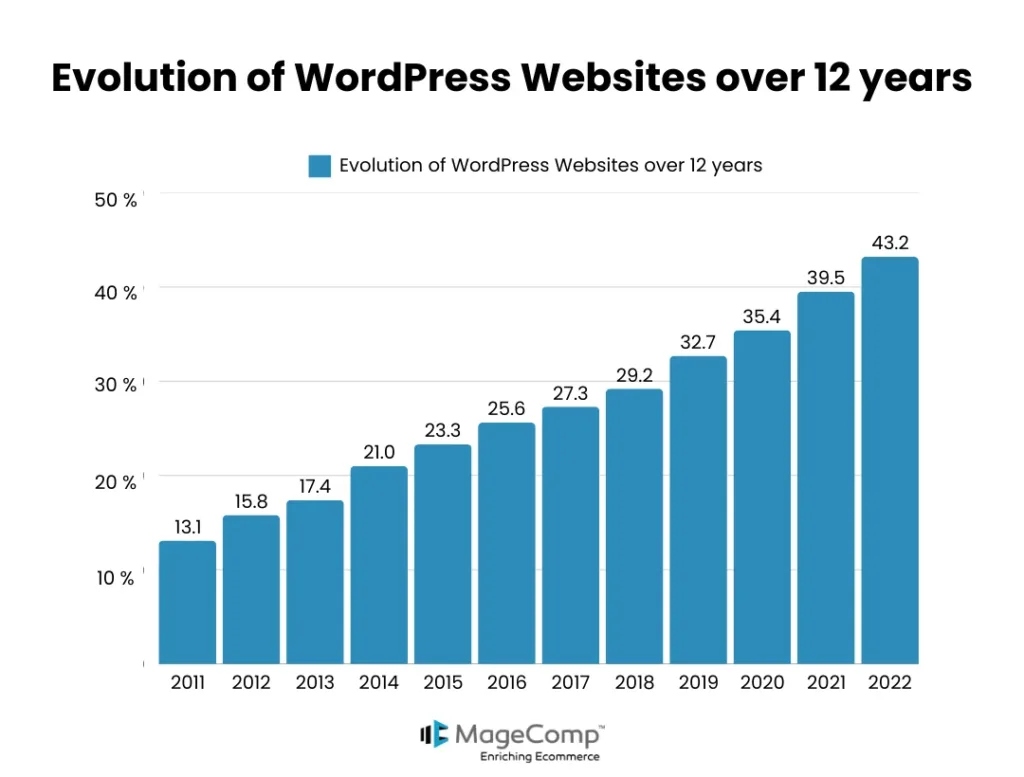

Today, WordPress powers more than 43% of all websites. That success didn’t come from proprietary software or corporate sales teams. It came from open source simplicity and a community whose contributions compounded over time.

Why It Worked

- Zero licensing costs

- Developer + non-developer friendly

- Endless extensibility (plugins/themes)

- Self-hosting options + cloud providers

- Global community collaboration

It wasn’t just a product—it was a movement.

The State of Open Source LLMs: Déjà Vu

The Early Days Are Always Centralized and Closed

Most AI users today access LLMs through hosted services such as ChatGPT, Claude, Gemini, or Perplexity. These tools are powerful but come with tradeoffs: usage limits, data privacy concerns, fine-tuning restrictions, and vendor lock-in.

It’s reminiscent of the early internet—before content management systems like WordPress made digital publishing truly accessible.

The Rise of Open Source LLMs

Over the past year, open source LLMs have surged. Models such as Meta’s Llama 3, Mistral AI’s Mistral and Mixtral, and TII’s Falcon have freely downloadable weights, are easy to quantize, and can be deployed locally.

Frameworks like Ollama, LM Studio, and LangChain are making it easier than ever to build applications on top of them. Hugging Face has become the new GitHub for AI models. What we’re seeing is the beginning of a bottom-up movement—a DIY AI renaissance.

Why the WordPress Moment Is Inevitable

1. There Is No Moat

A leaked 2023 memo from a Google researcher famously argued, “We have no moat, and neither does OpenAI,” even as vendors of custom AI tools try to build one. The memo was at least partly right: once weights are open-sourced and commoditized, the only true differentiators become data, distribution, and deployment UX.

Enterprise, private, and custom LLM stacks are poised to become ubiquitous.

Like WordPress plugins and themes, open source LLMs allow the community—not just big tech—to iterate, improve, and customize. Expect a wave of domain-specific models that outperform general-purpose giants in their respective verticals.

2. Corporate Demand for Privacy and Control

Enterprises are wary of sending sensitive data through public APIs. From data sovereignty to HIPAA/GDPR compliance, the need for self-hosted, private LLMs is exploding.

With private, open source models:

- You control the weights.

- You define the prompts.

- You own the data.

- You tune the outputs.

This is the AI equivalent of self-hosting a WordPress blog instead of using Medium or Substack.

3. Local Deployment Is Getting Easier

Thanks to quantization (e.g. the GPTQ method and the GGUF model format) and optimized runtimes like llama.cpp, even consumer-grade hardware can now run surprisingly capable models.
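
To see why quantization matters, here is a back-of-the-envelope sketch of how much memory a model’s weights occupy at different precisions. It assumes memory is simply parameter count × bits per weight, so it ignores the KV cache, activations, and runtime overhead; real quantized formats also store scaling factors, so actual usage runs somewhat higher. The function name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Rough memory footprint of a model's weights, in gigabytes (1 GB = 1e9 bytes).

    Assumption: counts weights only; KV cache, activations, and runtime
    overhead are ignored, so real-world usage will be higher.
    """
    bytes_per_weight = bits_per_weight / 8
    return num_params * bytes_per_weight / 1e9


# Compare full 16-bit weights with common 8-bit and 4-bit quantization levels.
for label, params in [("7B", 7e9), ("13B", 13e9)]:
    for bits in (16, 8, 4):
        print(f"{label} at {bits}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

Under these assumptions, a 7B model shrinks from roughly 14 GB at 16-bit to roughly 3.5 GB at 4-bit, which is what moves it from data-center hardware into consumer territory.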

Small businesses and hobbyists are deploying 7B–13B parameter models on local machines—no API key required.
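
As a concrete illustration, Ollama exposes a local HTTP API once it is installed. The sketch below sends a prompt to it using only Python’s standard library. It assumes Ollama is running on its default port (11434) and that a model tagged `llama3` has already been pulled; the helper names `build_request` and `generate` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for a locally hosted model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the model's text reply."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]
```

With the server running, `generate("llama3", "Summarize our leave policy in one sentence.")` returns the model’s reply as a string. The request never leaves the machine, and no API key is involved.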

AI-in-a-Box to Enterprise-Grade LLM Stacks

For Individuals and SMEs: The Raspberry Pi Moment

We are already seeing a wave of small-form-factor LLM “boxes”:

- Ugoos X7/X10

- NVIDIA Jetson Orin Nano

- Mini PCs with consumer GPUs

With preloaded models and a simple GUI, these devices are becoming the shared hosting plans of AI—cheap, accessible, and customizable.

It mirrors the rise of WordPress in the 2000s, when shared hosting providers enabled anyone to run a dynamic site for $5/month.

For Enterprises: Control Without Chaos

But enterprises don’t want a $200 device—they want a secure, compliant, cost-predictable way to deploy powerful models inside their infrastructure.

Here’s what’s happening:

- Turnkey LLM stacks are emerging (e.g. LangChain + Qdrant + llama.cpp + Triton Inference Server).

- Private LLM vendors like LLM.co are offering fully hosted, compliant solutions tailored for healthcare, legal, finance, etc.

- Enterprises can fine-tune, monitor, and version-control models like any other piece of critical software.

This is the WordPress “multisite” moment for AI: a single codebase, multiple isolated tenants, all with centralized governance.

Shared Infrastructure Is Coming

Just as WP Engine and Kinsta simplified WordPress hosting, specialized providers will simplify LLM hosting:

- Vertical-specific hosts for legal (e.g. LAW.co), real estate, finance, healthcare or marketing

- Federated LLMs per organization, with fine-grained access control

- API-first delivery of custom models trained on proprietary knowledge
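
Fine-grained access control is easiest to picture with a small sketch. Here each document is tagged with an organization and the groups allowed to read it, and anything a user is not entitled to is filtered out before it can reach a prompt. The `Document` and `User` types and the `visible_documents` function are hypothetical; a production system would more likely enforce this inside the retrieval layer or vector database than in application code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    text: str
    org: str                         # tenant that owns the document (hypothetical field)
    allowed_groups: frozenset[str]   # groups permitted to read it (hypothetical field)


@dataclass(frozen=True)
class User:
    name: str
    org: str
    groups: frozenset[str]


def visible_documents(user: User, docs: list[Document]) -> list[Document]:
    """Keep only the documents this user may send to the model.

    A document passes if it belongs to the user's organization (tenant isolation)
    and shares at least one group with the user (fine-grained access control).
    """
    return [d for d in docs if d.org == user.org and d.allowed_groups & user.groups]
```

Filtering before retrieval, rather than after generation, keeps restricted text out of the model’s context entirely.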

What Still Needs to Happen

Before the LLM WordPress moment truly arrives, we need:

- GUI-based fine-tuning and deployment tools

- A plugin ecosystem for LLM apps (e.g. CRM bots, finance analysts, contract summarizers)

- Simple RAG integrations for internal documents

- Better UI/UX for chaining, logging, and versioning LLM tasks

- An open-source “Elementor” or “WooCommerce” for AI apps
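
A “simple RAG integration” for internal documents boils down to two steps: retrieve the documents most relevant to a question, then place them in the prompt. The sketch below uses plain bag-of-words cosine similarity as a stand-in for the embedding models and vector databases (such as Qdrant) a real deployment would use; the function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query (stand-in for vector search)."""
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Insert the retrieved documents into a grounded prompt for the model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"
```

The resulting prompt string could then be passed to a locally hosted model, so internal documents never leave the organization’s infrastructure.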

We're getting close—but the tipping point will come when AI becomes deployable by default, not just by dev teams with GPUs and YAML files.

It’s Not If—It’s When

WordPress didn’t win because it was the most powerful—it won because it was open, usable, and extendable.

Private, open source LLMs are on the same path. When the right mix of deployment tooling, community involvement, and business need hits, we’ll see the dam break.

At that moment, companies won’t just use AI—they’ll own it.

And when that day comes, we’ll look back on this period the same way we look at 2003 in the web’s history—as the beginning of something much, much bigger.

Nate Nead is the founder and CEO of LLM.co where he leads the development of secure, private large language model solutions for enterprises. With a background in technology, finance, and M&A, Nate specializes in deploying on-prem and hybrid AI systems tailored to highly regulated industries. He is passionate about making advanced AI more accessible, controllable, and compliant for organizations worldwide.